What is a voice AI chip?

A voice AI chip, as the name suggests, is an AI chip that processes voice. Many people's first contact with voice AI chips probably came through smart speakers such as Tmall Genie, Xiaoai and Xiaodu, because these products let us experience intelligent voice interaction, and the voice AI chip is the foundation and core of that interaction.

Figure | Schematic diagram of speech recognition

The AI chip sector has been very popular in recent years. Speech recognition, natural language processing (NLP) and machine learning occupy an important position in AI technology and are the basis of human-computer interaction, and the first two are both voice-related. This is because voice is not only convenient, but also the form of human-computer interaction closest to everyday human communication. It is already widely used in smart home and in-vehicle scenarios.

Why should speech recognition be moved from the cloud to the terminal?

The story of intelligent speech recognition goes back to around 2010. At that time, AI technology represented by neural networks made intelligent speech recognition possible, and it continued to mature through the subsequent waves of IoT and AIoT.

Early intelligent speech recognition was limited by its computing power requirements. Terminals had no dedicated chip that could balance computing power against power consumption and cost, so running intelligent speech recognition on the terminal was impossible. Cloud processing, meanwhile, had natural advantages: rich content and services, rapid iteration, and convenient data collection and training. As a result, most speech recognition at the time was deployed in the cloud.

However, cloud-based speech recognition also has drawbacks. It cannot guarantee a stable real-time response, and when the network goes down it cannot respond at all. Important information must be transmitted over the network, where it risks being attacked and leaked, so user privacy and security cannot be guaranteed. It has no cost advantage either: in addition to continuous bandwidth consumption, cloud voice requires a large number of servers running continuously in the background for voice processing, which is very expensive.

Weighing these advantages and disadvantages of cloud voice, companies such as Qiying Tai Lun began to invest in research on device-side speech recognition. Demand alone, however, cannot support the rise of a new industry. An industry also needs enough market capacity to keep funds flowing, which in turn supports continuous product iteration and forms a positive cycle.

According to data released by iResearch, the number of IoT device connections in China reached 7.4 billion in 2020 and is expected to exceed 15 billion in 2025. McKinsey uses a more dynamic figure to show the growth rate of the Internet of Things, estimating that about 127 new devices currently connect to the Internet every second.
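To put these figures in perspective, the short calculation below derives the annual growth rate implied by the two iResearch numbers and scales the per-second McKinsey estimate to a full day. It is only a back-of-the-envelope sketch: the function name is illustrative, and because the 2025 figure is quoted as "exceed 15 billion", the result is a lower bound of roughly 15% a year.

```python
def implied_cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that takes `start` to `end` in `years` years."""
    return (end / start) ** (1 / years) - 1


# Figures quoted in the text, in billions of connections.
# 15.0 is a lower bound, since the forecast is "exceed 15 billion".
connections_2020 = 7.4
connections_2025 = 15.0

cagr = implied_cagr(connections_2020, connections_2025, years=5)
print(f"Implied annual growth: at least {cagr:.1%}")

# The per-second estimate, scaled to one day.
devices_per_second = 127
devices_per_day = devices_per_second * 24 * 60 * 60
print(f"New devices per day: about {devices_per_day:,}")
```

In other words, doubling in five years corresponds to growth of about 15% a year, and 127 devices per second is close to 11 million new devices per day.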

Against this volume of IoT devices, however, Huawei offers another figure: most current consumer devices with IoT capabilities have a connection activation rate of only 5% to 20%. Why? The reason is that operating them is too complicated. The solution is to add voice, an interface suited to users of all ages, to IoT connectivity.

Some people in the industry predict that the voice AI chip market will rapidly grow to about 500 million to 1 billion per year, and that it will keep growing as applications expand and penetration rises.

To sum up, both scenario demand and market capacity are driving the adoption of device-side speech recognition. But how does it get there? It does not happen overnight.

Three development stages of device-side speech recognition chips

Speech recognition faces different challenges from image recognition. It does not need as much computing power, but it places high demands on algorithms. He Yunpeng, founder and CEO of Qiying Tai Lun, told Youfei.com: "Speech recognition has many application scenarios, so the noise it faces is very diverse, including both steady-state and non-steady-state noise, which makes high-accuracy recognition very difficult. To achieve better speech recognition, it is therefore necessary to master the full chain of intelligent speech algorithm technology, including front-end noise reduction of the speech signal, speech recognition, speech synthesis, speech decoding, speech big data processing and training, and NLP."

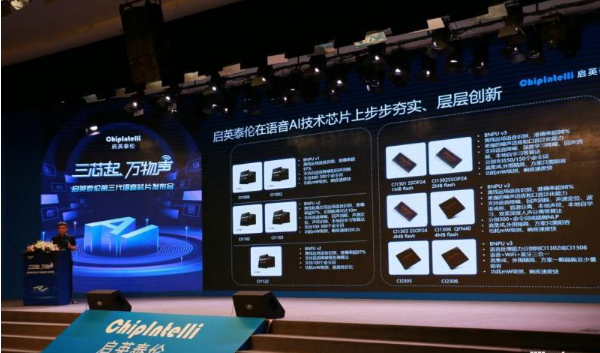

Of course, speech recognition technology in China keeps becoming more accurate and flexible with each iteration. Below we briefly summarize the three development stages of device-side voice AI chips, using the three generations of Qiying Tai Lun's self-developed BNPU (Brain Neural Network Processor) technology platform as the example.

Stage 1: Speech Recognition Function

The first-generation BNPU chip realized device-side speech recognition. It was the industry's first voice AI chip with an integrated neural network processor, and it marked the rise of offline voice applications in the industry.

In terms of integration, the BNPU 1.0 solution (CI1006) is relatively discrete, and the solution costs about 50 to 90 yuan.

Stage 2: Offline voiceprint recognition + command word self-learning

The second-generation BNPU chips (CI1102/CI1103 and CI1122) provide not only offline speech recognition, but also personalized functions such as offline voiceprint recognition and command word self-learning. In practice, features can be configured to each user's personal preferences based on their voiceprint, and self-learning of offline command words enables recognition of local accents and dialects under mild noise.

In terms of integration, BNPU 2.0 integrates the audio codec, Flash and other units, and adds dual-microphone array support for enhanced processing. The solution costs about 15 to 25 yuan.

Stage 3: Deep Noise Reduction + Deep Separation + Command Word Self-Learning 2.0 + Offline NLP

In addition to inheriting the second generation's speech recognition and voiceprint recognition, the third-generation BNPU chips support deep-learning-based noise reduction (deep noise reduction), vocal separation (deep separation), command word self-learning 2.0, and the industry's first offline NLP technology. In practice, the CI1301 can recognize speech under medium noise, while the CI1302, CI1303, CI1306 and CI1312 can recognize speech well even in strong noise.

In terms of integration, BNPU 3.0 not only integrates the audio codec's analog MIC interface, a DMIC interface for digital PDM microphones, a general-purpose ADC, and common MCU interfaces such as serial ports, PWM and GPIO, but also further integrates 4-wire NOR Flash, a three-channel LDO PMU and a high-precision RC oscillator. The solution costs about 10 yuan.

Asked why the third-generation solution costs less than the first generation, He Yunpeng said: "Many people think offline voice adds a lot of cost on the device side for data processing, speech recognition and storage. In fact, cloud costs are continuous and long-term, and they can be avoided. In addition, the up-front cost of building a cloud is very high, and many manufacturers cannot afford to build one. There is also an annual operating cost of more than 10 yuan per device. When a device is sold, the manufacturer pays this for two years, but afterwards the customer has to pay, which is not fair. With the progress of Moore's Law, the overall cost of device-side speech recognition solutions has fallen by 30%, reaching the cost of a general-purpose MCU."
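The cost argument in the quote can be made concrete with a little arithmetic. The sketch below compares a recurring cloud cost of 10 yuan per device per year (the quote says "more than 10", so this is a floor) with a one-off device-side solution price of about 10 yuan (the BNPU 3.0 figure above). It is a simplification under stated assumptions: the constant and function names are illustrative, cloud build-out costs and networking hardware are ignored, and the annual cloud cost is treated as constant.

```python
# Assumed inputs taken from the figures quoted in the text (in yuan).
CLOUD_COST_PER_DEVICE_YEAR = 10.0  # floor: the quote says "more than 10 yuan"
DEVICE_SOLUTION_PRICE = 10.0       # approximate BNPU 3.0 solution price


def cumulative_cloud_cost(years: int) -> float:
    """Recurring cloud operating cost for one device after `years` years."""
    return CLOUD_COST_PER_DEVICE_YEAR * years


def cumulative_device_cost(years: int) -> float:
    """One-off device-side cost; it does not grow with years of use."""
    return DEVICE_SOLUTION_PRICE


for years in range(1, 6):
    cloud = cumulative_cloud_cost(years)
    device = cumulative_device_cost(years)
    print(f"year {years}: cloud >= {cloud:.0f} yuan, device-side ~ {device:.0f} yuan")
```

Under these assumptions the two options cost about the same after one year, and the cloud option costs more in every year after that, which includes the period after the manufacturer's two prepaid years end.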

Beyond price, it is worth noting that NLP, once thought possible only in the cloud, now also runs on device-side intelligent voice chips. This preserves the user experience while reducing cloud construction and operating costs, cutting network bandwidth consumption, and improving user security.

Device-cloud integration is the ultimate destination of voice AI chips

Of course, moving speech recognition from the cloud to the terminal does not make the two an either-or choice; in many scenarios they are not. In the long run, the terminal and the cloud will integrate with and complement each other.

As device-side voice chips become more capable, better performing and more reliable while their prices keep falling, device-side speech recognition will deliver advantages in reliability, privacy protection, cost and flexibility. Combined with integrated networking, this lets AIoT make effective use of data analysis and scenarios in the era of big data.

Take air conditioners as an example. Most traditional air conditioners only adjust temperature, but in today's differentiated competition, large manufacturers' air conditioners are evolving toward robots that provide more intelligent services, such as adjusting air temperature, humidity, freshness, oxygen levels and PM2.5 levels, as well as playing morning music for ambience or offering nutritional advice. The foundation of these services is voice interaction, which must be device-side speech recognition with lower latency and more stable operation, while the underlying service transactions and big data on user habits are better suited to the cloud, so that the two work in synergy.
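One way to picture this division of labor is a simple router that handles known command words on the device and forwards everything else to the cloud when a network is available. The sketch below is purely illustrative: the command list, the `Route` enum and `route_request` are hypothetical names, not part of any vendor's SDK, and a real product would route on recognition results rather than exact text matches.

```python
from enum import Enum


class Route(Enum):
    DEVICE = "device"            # handled offline by the voice chip
    CLOUD = "cloud"              # forwarded to cloud services
    UNAVAILABLE = "unavailable"  # needs the cloud, but the network is down


# Hypothetical command words that an offline chip might recognize locally.
LOCAL_COMMANDS = {"turn on", "turn off", "raise temperature", "lower temperature"}


def route_request(text: str, network_up: bool) -> Route:
    """Decide where a recognized utterance should be handled."""
    normalized = text.strip().lower()
    if normalized in LOCAL_COMMANDS:
        # Core controls keep working with low latency, even offline.
        return Route.DEVICE
    if network_up:
        # Content-heavy requests (music, advice) go to the cloud.
        return Route.CLOUD
    return Route.UNAVAILABLE


print(route_request("Turn on", network_up=False))
print(route_request("play some morning music", network_up=True))
```

The design choice here mirrors the text: basic control stays responsive regardless of network state, while services that depend on content and user data use the cloud.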

Closing thoughts

Domestic voice AI chips are showing explosive growth. Who will be the leader? He Yunpeng said: "Once this market develops and grows, it will become a typical winner-takes-all market. Qiying Tai Lun's iteration across multiple dimensions, including corpus data, algorithm models, chip architecture, AI development platforms and application solutions, has produced a Matthew effect. After nearly 7 years of development, Qiying Tai Lun has accumulated more than 5,000 B-end customers, more than 10,000 platform developers, and more than 100,000 enrolled AI students. Today, the entire offline voice industry is developing rapidly: installed capacity will exceed 20 million units within the year, and annual shipments will reach 100 million units in the next two years."